mind over model

A field guide to intelligence, agency, and human leverage in the age of AI

In 1863, amid the industrial progress of New York City, a seamstress voiced a pressing concern in the New York Sun: “Are we nothing but living machines, to be driven at will for the accommodation of a set of heartless, yes, I may say soulless people?” She captured the anxiety of countless workers facing the advent of the sewing machine. Tailors and seamstresses feared displacement as mechanized stitching began to outpace manual labor. Strikes and protests erupted, with workers demanding fair wages and humane working conditions in the face of relentless mechanization.

This apprehension wasn't isolated. Dock workers in the 1860s refused to work near grain elevators. Economists of the 19th century debated whether innovation would ultimately liberate workers or leave them unemployed. With every leap (electricity, assembly lines, computers, the internet, and more) came a resurgence of the same fear:

"Will technology make human labor irrelevant?"

Today, AI feels like the culmination of that fear. It doesn’t just extend the hand; it simulates the mind. It can generate language, code, images, and even strategic plans. That feels existential. But just like every previous leap, AI is not erasing human value; it is changing where that value lives.

This piece is for builders, leaders, creatives, and technologists trying to make sense of that shift. It’s not a map, but a compass. We’ll explore:

The difference between AI that answers and AI that acts

Where today’s tools thrive, and where they break

What the next generation of opportunities will demand from us

A redefinition of intelligence for a new era

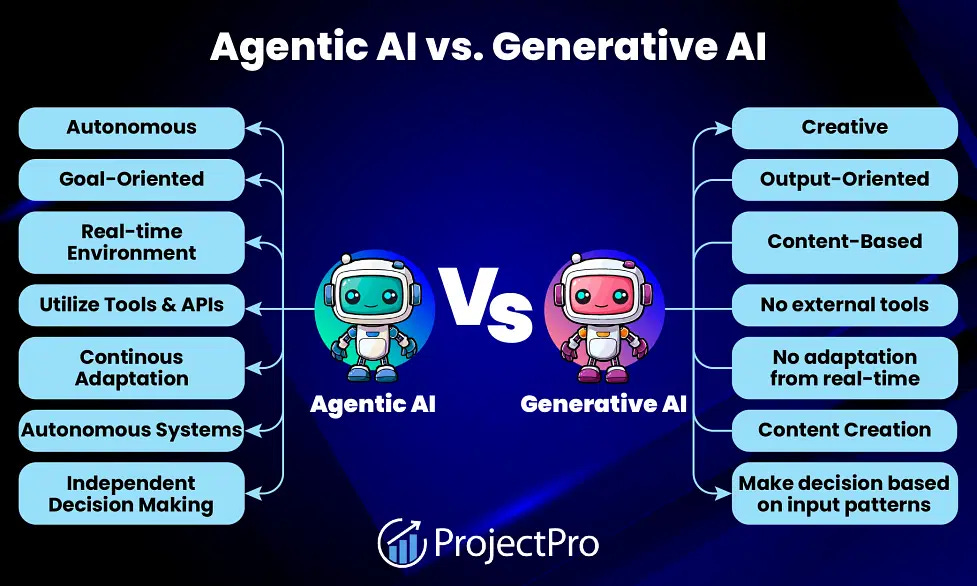

Two Modes of AI: Generative vs. Agentic

Most people use AI in a generative mode: ask a question, get an answer. That’s the typical way people interact with tools like ChatGPT or Claude. Used this way, these systems don’t plan, reflect, or carry work forward on their own; they respond to the prompt in front of them. They’re useful for accelerating well-defined tasks (summarizing, coding, writing), but they operate in a vacuum. Each request is essentially a fresh start.

Agentic systems are different. They can:

Plan a sequence of actions

Loop and iterate

React to changes in their environment

Maintain memory across steps

Coordinate subtasks toward broader goals

This distinction matters. Generative tools are powerful assistants. Agentic tools are collaborators, which brings more opportunity but also more risk. While generative AI boosts productivity, agentic AI transforms how we approach complex, dynamic problems.
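To make the distinction concrete, here is a minimal Python sketch under loudly stated assumptions: `generate`, `ToyAgent`, and the `flaky` environment are hypothetical stand-ins, not any real framework’s API, and no model is called. The generative function answers once and keeps nothing; the agent re-plans each step, acts, observes the outcome, records progress in memory, and retries until its subtasks are done or its step budget runs out.

```python
from dataclasses import dataclass, field


def generate(prompt: str) -> str:
    """Generative mode: one prompt in, one answer out, nothing carried forward."""
    return f"draft for: {prompt}"  # stand-in for a single model call


@dataclass
class ToyAgent:
    """Agentic mode (hypothetical): plan, act, observe, remember, repeat."""
    subtasks: list[str]
    max_steps: int = 10
    done: list[str] = field(default_factory=list)           # memory of completed work
    attempts: dict[str, int] = field(default_factory=dict)  # memory of tries per subtask

    def plan(self) -> list[str]:
        # Re-plan every step: whatever has not been completed yet.
        return [t for t in self.subtasks if t not in self.done]

    def run(self, flaky: dict[str, int]) -> list[str]:
        """`flaky[task]` is how many times a task fails before succeeding (a toy environment)."""
        for _ in range(self.max_steps):
            remaining = self.plan()
            if not remaining:
                break  # goal reached
            task = remaining[0]
            self.attempts[task] = self.attempts.get(task, 0) + 1
            if self.attempts[task] > flaky.get(task, 0):  # observe the outcome
                self.done.append(task)
            # On failure the task stays in the plan and is retried next loop.
        return self.done


print(generate("summarize the Q3 report"))
agent = ToyAgent(subtasks=["search", "draft", "review"])
print(agent.run(flaky={"draft": 2}))  # ['search', 'draft', 'review']
print(agent.attempts)                 # {'search': 1, 'draft': 3, 'review': 1}
```

The step budget (`max_steps`) matters: without it, a subtask that never succeeds would loop forever, which is one small illustration of the extra risk agentic systems carry.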

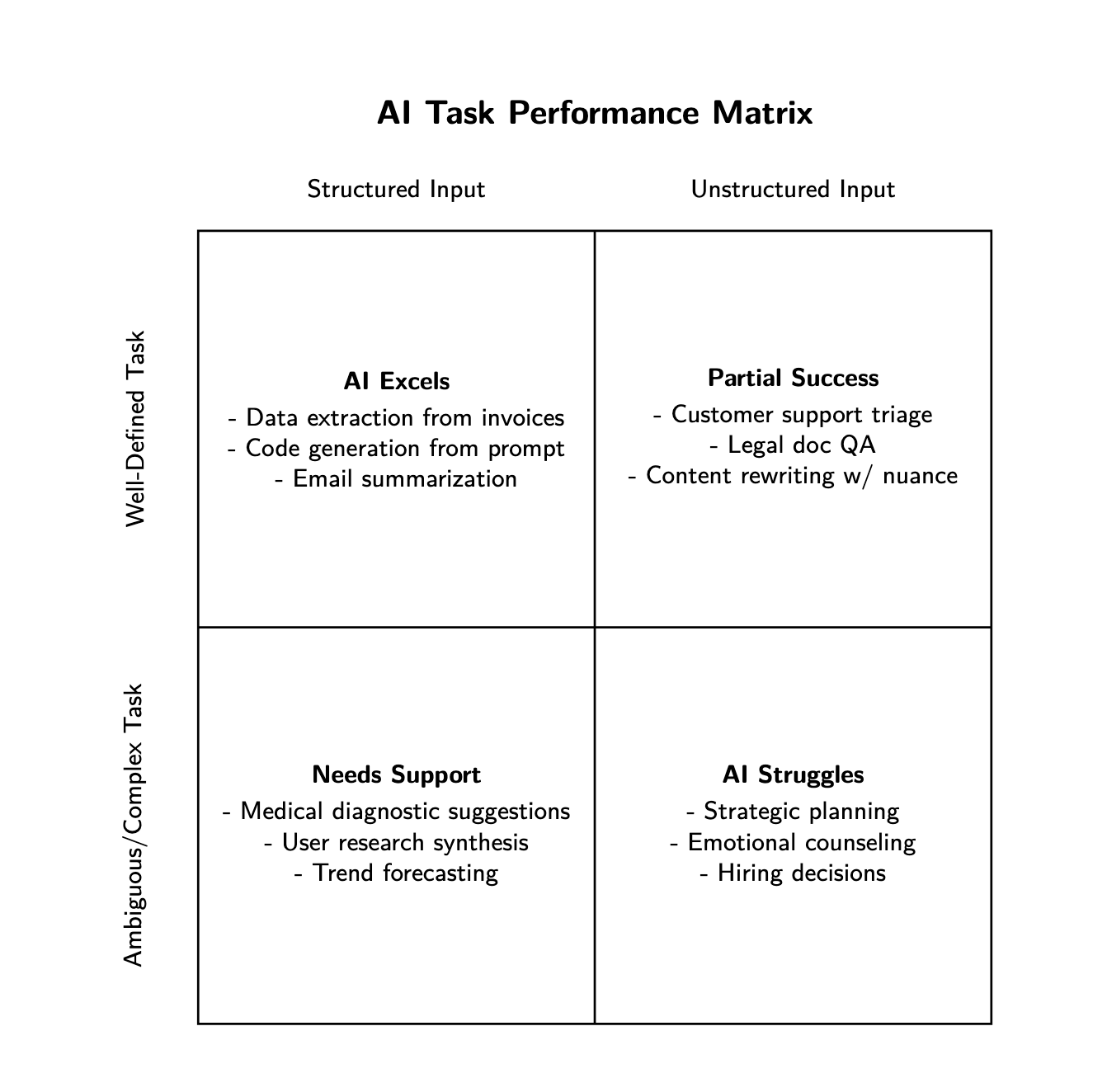

Where AI Feels Like Magic

LLMs and agents are highly effective when working with clear inputs, predictable outputs, and structured data. They work best when used as:

Accelerators for already-defined tasks

Scaffolds for early-stage creative or cognitive workflows

Interfaces between data and decisions (but not as replacements for judgment)

Examples:

Coding: Writing boilerplate code, refactoring, explaining code snippets, and agent-driven DevOps workflows

Text transformation: Summarizing articles, rewriting content, and translating between languages

Information retrieval: Answering questions from docs, generating FAQs, explaining concepts

Data manipulation: Parsing PDFs, structuring CSVs, extracting data from freeform sources

Research acceleration: Drafting outlines, proposing hypotheses, identifying gaps

Image/video generation: Prompt-based asset creation, image editing with guidance

These all share one thing: they don’t require navigating ambiguity, nuance, or judgment. They’re tasks AI can execute without needing to know when not to act.

Where the Magic Breaks

AI systems struggle when tasks require:

Real-time awareness

High stakes or ethical nuance

Long-term planning with feedback loops

Emotional or cultural sensitivity

The ability to defer or escalate uncertainty

Healthcare

IBM Watson for Oncology: Failed due to reliance on synthetic data, leading to unsafe recommendations.

Forward Health & Olive AI: Faced integration failures and patient safety issues. Shut down after limited traction.

Lesson: Synthetic or incomplete data, poor operational fit, and lack of trust can cripple high-stakes AI applications.

Hiring and Recruitment

Amazon Hiring Tool: Learned a bias against women from historical resumes that skewed heavily male, and was scrapped for perpetuating inequality.

Lesson: Without fairness checks, AI can reinforce societal biases and erode trust in decision-making.

Public Sector

Teacher assessment & hospital triage tools: Misclassified top-performing teachers as underperformers and prioritized healthier patients over sicker ones.

Lesson: In public services, AI failures can harm trust, especially for marginalized groups.

Tasks requiring real-time awareness

Gannett Sports Reporting: Generated awkward, error-filled content lacking local nuance.

Lesson: AI tools often lack contextual awareness and struggle with timeliness and tone.

Tasks involving long-term planning with feedback loops

NFL Digital Athlete: Flagged wrong players as injury risks; failed to refine over time.

Lesson: AI struggles with long-term, iterative planning where outcomes depend on continuous feedback and model refinement.

Tasks requiring emotional nuance or cultural sensitivity

Klay Thompson AI Misinterpretation: An AI-generated trending story on X read the basketball slang “throwing bricks” (badly missed shots) literally and accused him of vandalism, leading to a PR disaster.

Lesson: AI can easily misread colloquialisms, idioms, or words whose meaning shifts with context, creating room for miscommunication and harm in people-facing roles.

Tasks involving uncertainty

Robo-Umpiring in MLB: Overturned key strike calls incorrectly.

Lesson: AI struggles with knowing when to act or when to defer to human judgment, especially in ambiguous or high-pressure scenarios.

These failures aren’t about weak models; they’re about placing AI into contexts that demand nuance, judgment, or reflective reasoning, all of which current systems lack. Recognizing where AI falls short isn’t a condemnation. It’s a compass. The most important opportunities aren’t in trying to replace human judgment, taste, or coordination; they’re in designing systems that complement them.

This next section explores the real, durable opportunities emerging in the age of AI, where human awareness, decision-making, tacit knowledge, and intentionality become more valuable, not less, in a world filled with intelligent tools.

The Hidden Work of AI

1. Input Parsing

One of the most overlooked but critical challenges in any AI system is turning real-world messiness into structured, machine-readable inputs. The success of AI is predicated on data, but raw human experience doesn’t come pre-packaged as clean JSON. Someone still needs to parse it.
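A minimal sketch of that parsing layer, assuming a hypothetical play-by-play feed where each line looks like `Q2 07:41 Green defensive rebound`. The regex, `parse_play`, and the field names are illustrative inventions; a production system might put an LLM with a structured-output schema in this role. The point is the shape of the work: domain knowledge (a rebound is either offensive or defensive) lives in the schema, and anything that doesn’t fit is flagged for a human instead of being forced into a guess.

```python
import json
import re

# Hypothetical feed format: "Q<quarter> <clock> <Player> <offensive|defensive> rebound".
REBOUND = re.compile(
    r"^Q(?P<quarter>[1-4]) (?P<clock>\d{1,2}:\d{2}) "
    r"(?P<player>[A-Z][\w'.-]*) (?P<kind>offensive|defensive) rebound$"
)


def parse_play(line: str) -> dict:
    """Turn one free-text play description into a structured record.

    Lines the parser does not understand are flagged for human review
    rather than being coerced into a plausible-looking record.
    """
    m = REBOUND.match(line.strip())
    if m is None:
        return {"type": "unparsed", "raw": line, "needs_review": True}
    return {
        "type": "rebound",
        "quarter": int(m["quarter"]),
        "clock": m["clock"],
        "player": m["player"],
        "offensive": m["kind"] == "offensive",
        "needs_review": False,
    }


feed = ["Q2 07:41 Green defensive rebound", "Q2 07:30 ball off Green out of bounds?"]
print(json.dumps([parse_play(line) for line in feed], indent=2))
```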

Examples:

Live sports stat entry (understanding what constitutes a "rebound" in basketball)

Field data collection in agriculture or conservation

Synthesizing insights from customer interviews

Journalism and real-time event reporting

Prediction: Demand will surge for people who can turn messy, unstructured human data into machine-readable formats. Tacit domain knowledge will become a key differentiator: knowing what counts as a "rebound" in basketball, or how to detect a digitally fabricated event, will matter more than ever.

Open Questions:

How do we reliably translate subjective, real-time events into structured formats?

Can models be trained to parse input correctly without being taught domain-specific nuance?

How will we distinguish real inputs from AI-generated ones, for example through digital signatures?

Can AI learn contextually from messy information, or does it always need structure?

Who owns the parsing layer of tomorrow’s workflows?

2. Edge Case Handling

AI systems can be right 90% of the time, but trust erodes when the remaining 10% leads to unpredictable or harmful outcomes. Just because a system nails the base case doesn’t mean it’s ready for adoption, especially in enterprise or high-stakes contexts. Scalability isn’t determined by peak performance; it’s determined by floor performance. Can the system be trusted when things go wrong? Can it fail gracefully?

Enterprises won’t build workflows around tools they can’t rely on. Users don’t stick with products that break in edge cases. The bottleneck to adoption isn’t the median case; it’s whether the system can handle ambiguity, exception, or escalation without introducing chaos.
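One way to fail gracefully is an explicit fallback policy. The sketch below is hypothetical: `decide`, the 0.9 confidence threshold, and the `seen_range` bounds are assumptions chosen for illustration (imagine automated refund approvals), and a real system would tune them against its own data. The model’s answer is used only when it is confident and the input resembles what it was trained on; otherwise the case is escalated with a stated reason instead of being silently guessed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Decision:
    route: str             # "automate" or "escalate"
    label: Optional[str]   # the model's answer, only when we act on it
    reason: str


def decide(label: str, confidence: float, amount: float,
           threshold: float = 0.9,
           seen_range: tuple[float, float] = (0.0, 10_000.0)) -> Decision:
    """Hypothetical fallback policy.

    Act on the model's label only if it is confident AND the input falls inside
    the range it was trained on; otherwise hand the case to a human with a reason.
    """
    low, high = seen_range
    if not low <= amount <= high:
        return Decision("escalate", None, f"amount {amount} outside trained range {low}-{high}")
    if confidence < threshold:
        return Decision("escalate", None, f"confidence {confidence:.2f} below {threshold}")
    return Decision("automate", label, "confident and in-distribution")


for case in [("approve", 0.97, 120.0), ("approve", 0.62, 120.0), ("deny", 0.99, 50_000.0)]:
    print(decide(*case))
```

A threshold on raw model confidence is only as trustworthy as that confidence is well calibrated.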

Consider autonomous vehicles. Despite sophisticated AI and advanced sensor arrays, real-world unpredictability (like sudden weather shifts, construction zones, or erratic human behavior) has made it incredibly hard for these systems to scale. A self-driving car that performs flawlessly in perfect conditions but fails dangerously in edge cases doesn't inspire public trust or regulatory confidence. This is why autonomous vehicle rollouts have been slow, cautious, and often limited to geo-fenced areas.

Other domains face similar dynamics:

Finance: AI risk models that can't explain black swan exceptions won’t gain institutional trust.

Customer support: Chatbots that fail on outlier queries create more support tickets, not fewer.

Healthcare: Diagnostic tools that miss rare symptoms erode clinician confidence.

Examples:

AI misclassifying tone in sensitive customer service situations

Legal or medical misinterpretation of rare inputs

Prediction: Many startups and tools will fail to gain adoption, not because the AI doesn't work, but because it creates more complexity in edge cases. Tools that don’t handle edge cases will erode trust. Tools that recover gracefully will win.

Open Questions:

How can we safely give greater responsibility to black-box systems?

Can we design AI systems that escalate uncertainty instead of masking it?

What are the best practices for fallback behavior when predictions fall outside trained expectations?

3. Coordination and Scale

Even if AI makes individuals 10x more productive, there are still classes of problems that only large, well-coordinated teams can solve. AI doesn't eliminate the need for collaboration; it changes the shape of it.

Scale buys capabilities that are impossible at smaller sizes: global infrastructure, vertically integrated ecosystems, and cross-disciplinary R&D efforts. Think of Apple designing chips and phones while controlling distribution and privacy policy, or Google orchestrating satellite imagery, real-time traffic, and language translation across the globe. These required enormous organizational coordination.

But AI introduces a wrinkle: it can bend the curve that links headcount to output. In the past, impact scaled roughly linearly (or sub-linearly) with headcount. Now, a team of 5 with 50 well-orchestrated AI agents might outperform a traditional team of 100.

In the other direction, AI may allow already-massive organizations to scale in non-traditional ways, coordinating across thousands of internal tools and functions that used to break under communication and management load.

Examples:

Collaborative research teams using agent swarms to synthesize findings across domains rapidly.

Product teams orchestrating multiple specialized agents (e.g., summarizer, sentiment analyzer, QA bot) in real-time feature delivery.

Google-scale companies building and maintaining AI-driven platforms that update in real time based on user feedback loops and live A/B testing.
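A minimal orchestration sketch, assuming each specialized “agent” is just a function that reads a shared state and returns new fields. `summarizer`, `sentiment`, `qa_bot`, `orchestrate`, and the keyword lists are hypothetical stand-ins for model calls, not a real multi-agent framework. The orchestrator runs them in order and keeps an audit log of which agent wrote which fields, a small first step toward making multi-agent pipelines debuggable.

```python
from typing import Callable

# Hypothetical stand-ins: each "agent" reads shared state and returns new fields.
Agent = Callable[[dict], dict]

POSITIVE = {"love", "great", "fast"}
NEGATIVE = {"slow", "broken", "crash"}


def summarizer(state: dict) -> dict:
    # Stand-in for a summarization model: keep the first sentence.
    first = state["feedback"].split(".")[0].strip()
    return {"summary": first + "."}


def sentiment(state: dict) -> dict:
    # Stand-in for a sentiment model: count positive vs. negative keywords.
    words = {w.strip(".,!?").lower() for w in state["feedback"].split()}
    score = len(words & POSITIVE) - len(words & NEGATIVE)
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return {"sentiment": label}


def qa_bot(state: dict) -> dict:
    # Stand-in for a QA gate: block anything without a summary or with negative sentiment.
    passed = bool(state.get("summary")) and state.get("sentiment") != "negative"
    return {"qa_passed": passed}


def orchestrate(feedback: str, agents: list[tuple[str, Agent]]) -> tuple[dict, list[str]]:
    """Run agents in sequence over shared state, logging who wrote what."""
    state: dict = {"feedback": feedback}
    log: list[str] = []
    for name, agent in agents:
        update = agent(state)
        log.append(f"{name} wrote {sorted(update)}")
        state.update(update)
    return state, log


pipeline = [("summarizer", summarizer), ("sentiment", sentiment), ("qa", qa_bot)]
result, audit = orchestrate("The export is slow and broken.", pipeline)
print(result["summary"], result["sentiment"], result["qa_passed"])
print(audit)
```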

Prediction: Just as remote work reshaped team structures, AI will reshape org design. We’ll see:

Ultra-scaled orgs (10x Google) that use AI to break traditional scaling limits, enabling coordination at levels current tools can’t support. This could unlock infrastructure megaprojects, world-scale simulations, global societal systems, and more.

Ultra-lean orgs (3–5 people) that outperform traditional companies by leveraging large networks of agents.

A new class of “agent managers” who coordinate not just humans, but agents, systems, and infrastructure across large or small teams.

Open Questions:

What does agent management look like as a discipline?

What kinds of problems still require large-scale human networks despite agentic leverage?

How do we audit, debug, and ensure accountability in complex, multi-agent systems?

What coordination problems will AI solve, and what new ones will it create?

4. Tacit Genius: Designing Flows That Work

LLMs and agents don’t magically solve complex problems. They’re not plug-and-play intelligence machines; they’re tools that need to be guided. To get meaningful results, you have to scaffold the problem, clarify the goal, break it into substeps, and design thoughtful workflows or prompts.

This is where tacit knowledge (the kind of deep, experience-based understanding that's hard to write down or teach) becomes crucial. It’s the difference between someone who has read a recipe and someone who knows how to improvise a great meal.

The most effective AI systems aren’t just the result of better models; they come from encoding human expertise into the design of the workflow itself.

Examples:

Agentic RAG (Retrieval-Augmented Generation): Instead of retrieving documents and summarizing them in one step, agentic workflows break the task down: first search, then rank, then refine, then summarize. This multi-step approach often outperforms naive single-pass retrieval because it mimics how an expert would work through the problem.

Human-in-the-loop systems: In areas like legal summarization, software development, or customer service, AI can draft or suggest responses, but humans still review, tweak, or validate the output. These workflows are most powerful when domain experts help define what “good” looks like.

Therapy, coaching, DIY: For example, a performance coach who knows what questions to ask and how to tailor exercises for different types of people could build a better AI agent than someone who just asks ChatGPT to “be a coach.” It’s the sequencing, phrasing, and domain intuition that make the difference, and that comes from real-world experience.
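The search, rank, refine, summarize sequence from the agentic RAG example can be sketched in a few lines. This is a toy under stated assumptions: relevance is plain keyword overlap, “refine” is interpreted here as discarding weak matches (other designs rewrite the query instead), and `summarize` stands in for an LLM call by taking each document’s first sentence. All function names are hypothetical. Because each stage is explicit, the workflow can also decline to answer when nothing relevant survives.

```python
import re

Corpus = dict[str, str]


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search(query: str, corpus: Corpus) -> list[str]:
    """Step 1: cast a wide net; any document sharing a query term is a candidate."""
    q = tokens(query)
    return [doc_id for doc_id, text in corpus.items() if q & tokens(text)]


def rank(query: str, doc_ids: list[str], corpus: Corpus) -> list[tuple[str, int]]:
    """Step 2: score candidates by keyword overlap, best first."""
    q = tokens(query)
    scored = [(d, len(q & tokens(corpus[d]))) for d in doc_ids]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def refine(ranked: list[tuple[str, int]], min_score: int) -> list[str]:
    """Step 3: drop weak matches instead of passing everything downstream."""
    return [d for d, score in ranked if score >= min_score]


def summarize(doc_ids: list[str], corpus: Corpus) -> str:
    """Step 4: stand-in for an LLM call; keep each document's first sentence."""
    return " ".join(corpus[d].split(". ")[0].rstrip(".") + "." for d in doc_ids)


def agentic_rag(query: str, corpus: Corpus, min_score: int = 2) -> str:
    kept = refine(rank(query, search(query, corpus), corpus), min_score)
    if not kept:
        return "No sufficiently relevant sources; escalate to a human."
    return summarize(kept, corpus)


corpus = {
    "a": "Rebounds are credited to the player who gains possession after a missed shot. Team rebounds also exist.",
    "b": "A missed free throw can still lead to a rebound.",
    "c": "Traveling rules were revised in 2018.",
}
print(agentic_rag("who gets credit for a rebound after a missed shot", corpus, min_score=4))
```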

Prediction: The future of AI won’t be decided by whether you’re using ChatGPT or Claude. It will be decided by who can design the smartest workflows. The real advantage lies with those who can encode deep, hard-won expertise into structured, repeatable task flows.

ChatGPT won’t beat Gordon Ramsay at cooking, not because it can’t follow a recipe, but because Ramsay knows when to break the recipe. That kind of expert intuition — knowing which steps matter, when to adjust, and why — is what separates a good system from an exceptional one.

Workflow design will become the new craftsmanship.

Open Questions:

In what fields will tacit knowledge remain king?

Can foundation models ever learn expert workflows without explicit decomposition?

Where do agentic approaches hit their limits?

What tools will emerge to help experts build agentic workflows without needing to code?

5. From Median to Extraordinary

AI is blowing open the gates of creation. Anyone can now write code, generate designs, launch websites, or compose music with just a prompt. But while the tools are powerful, they're also generic. They can produce something competent, but not something extraordinary. Extraordinary comes from human taste, vision, and craft. What’s finally changing is that those things no longer need to be filtered through deep technical knowledge to come alive.

Historically, technical fluency has been a bottleneck. The foundational layers of the internet, software, and even AI itself have largely been shaped by a relatively narrow group: engineers, often working on problems they understand personally. As a result, entire categories of human experience and creativity have been underserved, underbuilt, or overlooked entirely.

But now, the gates are cracking open. A chef can build an app. A therapist can build a tool. A writer can automate their creative workflow. AI is making technical scaffolding optional, and in doing so, it’s inviting the rest of humanity into the process of designing our digital world.

This isn’t the age of machine-built software. This is the beginning of the most human era of software we’ve ever seen.

Examples:

A chef building an AI-powered recipe site that captures the nuance of a regional cuisine they grew up with (something no generic food blog would ever get right).

A filmmaker storyboarding and scripting experimental films using generative tools, bypassing traditional studio constraints.

A small business owner automating back-office tasks with AI, then reinvesting that time to build more personalized and human relationships with their customers.

Prediction: We’re entering an era where creative fluency will matter more than code fluency. The builders of the next wave won’t just be engineers; they’ll be artists, therapists, teachers, small business owners, and visionaries who understand human needs deeply and can shape AI tools around them.

They won’t be creating software that looks like everything else. They’ll be creating tools that feel like them.

Open Questions:

How far can technical abstraction go? Will there always be a layer of tooling that only engineers can build?

What makes a contribution human in a world where machines can generate endlessly?

Can intentionality and taste be embedded into workflows, or are they the final frontier of irreducible human input?

Real Intelligence Is Calibration

One of the most profound forms of intelligence isn’t how much you know. It’s how accurately you understand what you know.

This is the insight behind the Dunning-Kruger effect: people with limited expertise often overestimate their abilities because they don’t yet know what they don’t know. True experts, by contrast, tend to be more cautious, not because they know less, but because they have a calibrated understanding of their own limits.

This is real intelligence. Not verbosity. Not confidence. But self-awareness. The ability to say: “I know this part well, and I know this other part is outside my scope.”

True mastery is the intelligence of boundaries. Of knowing the terrain of your own mind and being able to map the edges of your understanding. You’re not rewarded for how much you can say; you’re rewarded for how well your confidence matches reality.

And this is exactly where today’s AI systems fall short.

They generate fluent, confident responses whether they’re right or wrong. LLMs don’t reliably know when they’re bluffing; they lack calibrated self-awareness. They rarely raise a hand and say, “I’m not sure,” and they often present guesses in the same tone as facts. That’s not intelligence; it’s a liability.
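“How well your confidence matches reality” has a standard quantitative form: expected calibration error (ECE). Predictions are grouped into confidence bins, and ECE is the average gap between stated confidence and actual accuracy in each bin, weighted by how many predictions fall in it. The sketch below uses ten equal-width bins, a common but not universal choice; the function name is ours and the example confidences are made up for illustration.

```python
from typing import Sequence


def expected_calibration_error(confidences: Sequence[float], correct: Sequence[bool],
                               n_bins: int = 10) -> float:
    """Weighted average of |mean confidence - accuracy| across equal-width confidence bins."""
    if not confidences or len(confidences) != len(correct):
        raise ValueError("need non-empty inputs of equal length")
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # a confidence of 1.0 lands in the top bin
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if members:
            mean_conf = sum(c for c, _ in members) / len(members)
            accuracy = sum(ok for _, ok in members) / len(members)
            ece += len(members) / total * abs(mean_conf - accuracy)
    return ece


outcomes = [True, True, True, False, False]                  # right 3 times out of 5
bluffer = expected_calibration_error([0.95] * 5, outcomes)   # always sounds sure
honest = expected_calibration_error([0.6] * 5, outcomes)     # confidence matches its hit rate
print(f"bluffer ECE={bluffer:.2f}, honest ECE={honest:.2f}")
```

A low ECE doesn’t mean a system is accurate, only that its confidence is honest about its accuracy: the “honest” predictor above is still right just 60% of the time, but it says so.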

But this isn’t just an AI problem. It’s a systems problem.

Consider the story of Sahil Lavingia, a tech founder who briefly joined the U.S. government’s Department of Government Efficiency (DOGE). Like many technologists, he entered government expecting bloated bureaucracy and quick wins for automation. Instead, he found something different:

“There was much less low-hanging fruit than I expected… These were passionate, competent people who loved their jobs.”

From the outside, it looked inefficient. But inside, it was full of highly evolved processes, built not out of laziness, but out of the need to handle complexity, edge cases, and tradeoffs that outsiders didn’t understand.

That’s the trap: from a distance, everything looks simple. Up close, you start to see the wisdom built into the mess.

In both public systems and AI systems, the greatest danger isn’t ignorance; it’s uncalibrated confidence. That’s why in a world increasingly filled with intelligent tools, the most valuable human trait is judgment: the ability to know when to trust a system, when to question it, and when to step in.

True intelligence doesn’t just generate answers; it knows when not to.

The Mind Behind the Machine

As AI tools become more powerful and more accessible, it’s easy to assume that leverage comes from picking the right plugin, framework, or foundation model. But that’s not where the real differentiation lies. The edge isn’t in having the right tools; it’s in knowing why they work, where they break, and how to build thoughtful systems around them.

This idea echoes a point made by Venkatesh Rao: having the right system is less important than having mindfulness and attention to how the system is performing.

A flawed system, guided by a reflective operator, will outperform a perfect one that’s blindly trusted. And that’s the real risk with AI right now: people stack tools — agents, APIs, wrappers — without understanding how they behave, where they fail, or what unintended consequences they may trigger.

The outputs may look slick. The interface may be natural. But if the critical thinking hasn’t improved, then nothing truly has. In this new era, what matters most — and will continue to matter — is the mind behind the machine.

That means:

Asking why a system behaves the way it does.

Watching for failure patterns and emergent behavior.

Keeping humans in the loop. Not because AI can’t automate tasks, but because feedback and reflection are the real drivers of progress.

It’s not about which model or tool you use. It’s about how thoughtfully you use it.

History tells a clear story: new technologies don’t eliminate human value; they shift where it lives.

AI is no different. Yes, it will automate tasks. Yes, it will reshape industries. But it will also unlock entirely new categories of work, from agent coordination to workflow design to AI-native creativity, for those who are willing to learn, adapt, and lead.

The most durable opportunities won’t go to those who simply use the tools. They’ll go to those who understand how the tools work, where they fail, and what uniquely human value they can amplify. Judgment, taste, curiosity, coordination, and emotional intelligence aren’t outdated traits. They’re becoming the core skillset of the modern builder, leader, and creator.

Because in the end, true intelligence isn't about what you can generate; it’s about what you understand, what you choose to build, and how wisely you wield the tools in front of you.

And that remains defiantly human.